Cognitive Bias and Cognitive Shortcuts

The human brain is immensely capable, but it has limitations. Two of these limitations are cognitive bias and cognitive shortcuts.

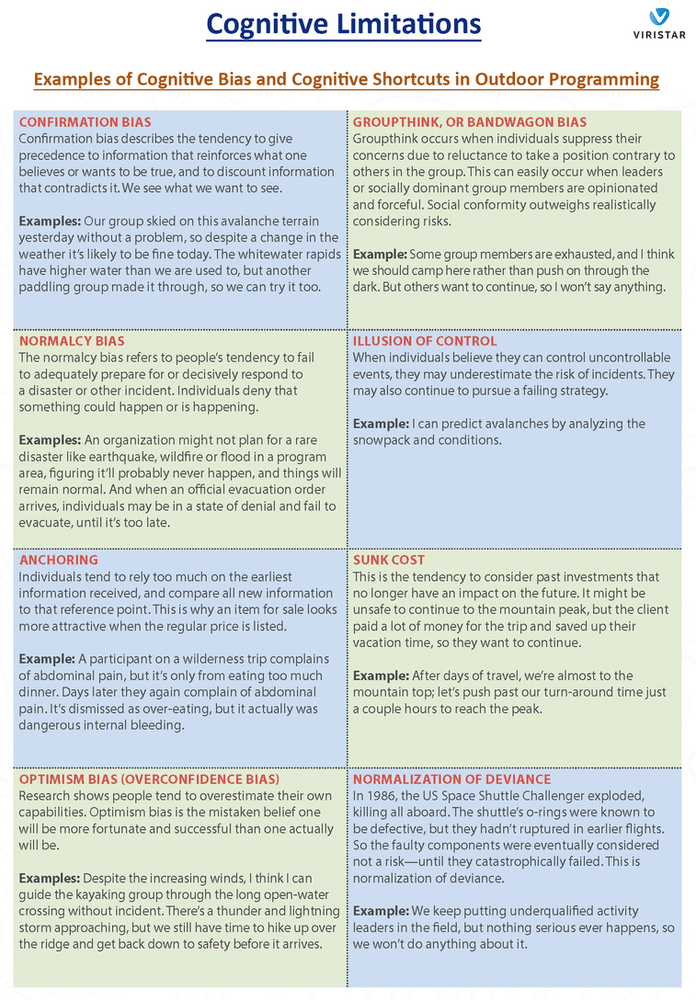

Cognitive biases are systematic errors in thinking that can lead to poor decisions. They involve faulty processing related to factors such as attention, memory, and attribution. Cognitive biases arise from deep-rooted psychological traits: a desire to view ourselves positively, a reluctance to change our beliefs, and a tendency to be influenced by others. Dozens of cognitive biases have been described, but there is no universal list or classification of them.

Cognitive shortcuts are assumptions, or generalizations about the way things are, that are often but not always true. Because the world is complex, presenting a sometimes overwhelming amount of information to process and too many possible decisions to contemplate, these mental shortcuts help us arrive at decisions quickly and efficiently. Although often useful, these assumptions, also called “heuristics,” cause problems when they turn out to be inaccurate. The role of heuristics in poor judgment was popularized by researcher Daniel Kahneman, who won the Nobel Prize in Economic Sciences for this work. Cognitive shortcuts can sometimes lead to cognitive biases.

Cognitive biases and cognitive shortcuts are sometimes confused with logical fallacies, but they are different. Cognitive limitations are weaknesses in our default patterns of thinking, whereas logical fallacies, such as circular reasoning, are errors of reasoning within a logical argument.

Treatment

Cognitive limitations are built into how human brains function, and no method of eliminating them is known to be effective. There are ideas about how to mitigate their impact, but research demonstrating that these ideas work is lacking. Nevertheless, some approaches to consider include:

Education. Learning more about the weaknesses in human thinking may help, although this appears to have limited effectiveness. Research suggests that case studies and role-playing combined with structured group discussions may be useful. Disseminating “lessons learned” and narratives of previous incidents may reduce optimism bias.

Rules. Rules avoid the need to make high-consequence decisions in stressful situations, such as emergencies, when thinking is compromised. They provide structure to replace emotion and intuition when conducting outdoor activities and managing emergencies. Examples include:

- Operating policies, procedures, and guidelines
- Mandatory use of checklists (for instance, before driving a vehicle)
- Algorithms or decision trees, for example to guide decisions about medical evacuations
- Decision points, such as pre-established turnaround times in mountaineering

Social norms marketing principles to counteract groupthink

Group dynamics management to diminish confirmation bias, groupthink, and sunk cost bias:

- In groups, obtain members’ opinions before the leader offers an opinion.
- Solicit anonymous opinions.
- Establish a “devil’s advocate” or “red team” entity that takes a contrary position and attempts to find weaknesses in the established plan.
- Conduct pre-mortems. Even simply asking oneself, “Would I like explaining to my boss how we got into this situation?” may help.

Unbiased external viewpoints. External risk management reviews can counteract a group’s cognitive limitations, such as groupthink and confirmation bias, particularly when the reviewers are truly independent and free of conflicts of interest. Risk Management Committee members who are psychologically independent of the organization can also help.

Note: this material is adapted from the book Risk Management in Outdoor Programs: A Guide to Safety in Outdoor Education, Recreation and Adventure.